Roadmapping

Coffee, Pastries and Product Talk

One of the best meetups that I regularly attend is the Product Manager Breakfast that is organized by the wonderful people at UserVoice. Product Manager Breakfast is a relaxed conversation between Product Manager peers. The goal of the meetup is to provide a safe place where PMs can vent, trade tips, and discuss the challenges and opportunities of the role.

This month, the topic was “PM Vs. Salesbro”. This was a highly demanded topic that kept coming up at previous breakfasts. Sales teams are often asking PMs for things in order to close deals. How do you know when to pay attention? What if they are a current customer? What if the customer is guaranteeing you a lot of money?

We talked for a full hour, each PM sharing personal experience and horror stories from the past. We all agreed that product management and sales are both aimed at increasing revenue and achieving the company’s mission. PMs need to get involved in the prospect/customer conversation to validate whether a new feature request is a universal need. You can’t be afraid to say no. Savvy product managers realize that their job is to please as many on-strategy customers and prospects as possible given the available resources. If sales fails, then product management is failing.

It’s important to clearly define the product roadmap and mission of the company and to make them known throughout the organization. Share the process of bringing a product to life with your customers and prospects. Make sure your sales team knows when a new feature would bump another potentially revenue-generating feature.

The opportunity to interact with other PMs in person is priceless. They understand your day-to-day life and can even provide solutions to challenges that you are blocked on. With that face-to-face sharing in mind, I hope to see you all at the Startup Product Summit SF2!

~Brittany

Editor’s Note: Evan Hamilton, Head of Community at UserVoice and an organizer of the Product Manager Breakfast meetup, spoke at February’s Startup Product Summit. His talk, Everyone’s Customers are Wrong & Their Data is Lying, was a big hit. Check it out for a taste of the kind of awesome talks we’ll have at Summit SF2!

This is a guest post by Brittany Martin, a product marketing manager and recent transplant to the Bay Area from Pittsburgh, PA. Prior to joining us in California, Brittany was a curator of StartupDigest, Pittsburgh. She just began a new gig at ReadyForce, and is thrilled to be on their team and on ours. Follow her @BrittJMartin and read her new blog, San Francisco via Pittsburgh.

Product Strategy Means Saying No | Inside Intercom

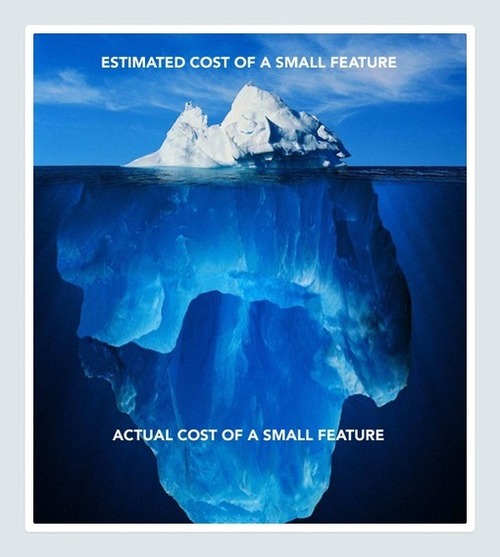

When selecting features, identifying bad ideas and killing them isn’t the hard part. Identifying good or even great ideas and still saying ‘no’ because they’re not the right fit for the product is.

Check out this amusing and practical article, with 12 arguments you’ll find you have to defend against as a product manager. My personal favorite: But it’ll only take a few minutes.

See on insideintercom.io

Key Takeaways from the Roadmapping and Execution Panel at Startup Product Summit SF

By Michelle Sun

Here are the notes for the Roadmapping and Execution Lightning Talks and Panel at Startup Product Summit SF. Omissions and errors are mine (please let me know if you find any, thank you!), credit for the wisdom is entirely the speakers’.

“Building a Great Product Through Communication” – Joe Stump, Co-Founder, Sprint.ly

- Product manager’s role is to capture, communicate and distill product ideas, and mediate between business stakeholders and makers.

- When building a product, pick two of the three: quickly, correctly, cheaply. Joe later mentioned on Twitter that he would pick quickly and correctly, as paying for quality is a no-brainer.

- “Want to increase innovation? Lower the cost of failure” – Joi Ito

- Empower every developer to commit things to the product through non-blocking development (NBD).

- Advocate a move to 100% asynchronous communication, because the current approach is broken (it needs human input to track reality) and remote teams are becoming more common.

“Raw Agile: Eating Your Own Dog Food” – Nick Muldoon, Agile Program Manager, Twitter

- Twitter does dog-fooding by allowing developers to deploy to an internal server. Dog-fooding:

  - gathers real data from real (though internal) users.

  - increases the incentive to ship quality code.

  - produces better feedback. He found that feedback in a dog-fooding environment is generally more constructive.

  - keeps momentum through a positive reinforcing loop of continuous deployment and feedback. The team gets 50-100 pieces of feedback from internal users each day.

- How to decipher and sift through the volume of feedback: look at only the “love” feedback, then all the “hate”, then discard the middle, categorize and show to the whole team.

- Other important aspects of dog-fooding:

  - Automation. Allows deploying more frequently, especially internally. “On any commit, deploy internally.” Avoid accumulating technical debt.

  - Visibility. Record progress and share it on a wiki.

  - Speed. Minimize cycle time (from to do, to in progress, to done).

“Best Practices for Architecting Your App to Ship Fast and Scale Rapidly” – Solomon Hykes, Founder & CEO, dotCloud

- Three things to aim for in architecting your app: speed (continuous deployment), scale, and future-proofing (be prepared for things moving very fast; avoid bottlenecks and the need to refactor every time you add a new feature).

- What are the patterns/strategies in getting to these three goals?

- Be aware of trade-offs. There is no silver bullet; always trade-offs and prioritization.

- Trade-offs evolve over time. Priorities change. Be aware of assumptions and revisit them from time to time.

- Trade-offs differ from team to team. Be aware of bias in different teams. Always keep ownership of key decisions.

- Put yourself in a position where you are embarrassed, and things are going to happen faster.

“Rocket Powered Bicycles: Avoiding Over and Under Engineering your Product” – Chris Burnor, Co-Founder & CTO, GroupTie & Curator, StartupDigest

- A product connects business priorities with user experience.

- Proposes that instead of Minimum Viable Product (MVP), think about Product: Viable Minimum (PVM). Focus on viability.

- A scientific method to approaching product roadmapping.

- Idea: think about business priorities, user experience. Do not let technical decisions drive your product. Let product drive your technical decisions.

- Test: Viability of the solution is whether it solves the problem it’s setting out to solve. Determine what level of viability is suitable in different stages: GroupTie’s first viable minimum was a keynote presentation that was sent to potential customers.

Scale of tests will vary. A lack of big tests means a lack of breakout growth/ideas; a lack of small tests means the team is doing too much.

- Conclusion: The debriefing phase is vital. Share test results with the team and learn what they mean for the idea. Testing without debriefing is like “talking without listening” in a conversation.

- An unusual example of a PVM is Apple. Product first: cares about user experience and business priorities. Viability second: it just works. Minimalism third: wait till a technology is ripe before adding to the product (no LTE for a long time, no RFID).


About The Author

Michelle Sun is a product enthusiast and Python developer. She worked at Bump Technologies as a Product Data Analyst and graduated from the inaugural class of Hackbright Academy. Prior to Hackbright, she founded a mobile loyalty startup. She began her career as an investment research analyst. When she is not busy coding away these days, she enjoys blogging, practicing vinyasa yoga and reading about behavioral psychology. Follow her on Twitter at @michellelsun and her blog.

Objectively Making Product Decisions

by Joe Stump

Deciding which mixture of features to release, and in what order, to drive growth in your product is difficult as it stands. Figuring out a way to objectively make those decisions with confidence can sometimes feel downright impossible.

On November 12th, we released Sprint.ly 1.0 to our customers. It was a fairly massive release with core elements being redesigned, major workflows being updated, and two major new features. The response has been overwhelmingly positive. Here’s an excerpt from an actual customer email:

“Well, I’ve just spent some time with your 1.0 release, and I think it’s wonderful. It’s got a bunch of features I’ve been sorely missing. To wit:

- Triage view – a Godsend or, no he didn’t?!

- Single-line item view – where have you been all my life?

- Convenient item sorting icons – OMG, how did you know?

- Item sizing, assigning, following icons everywhere – spin us faster, dad!

I’m sure there are a ton more, but these are great improvements.”

Yes, how did we know? I’m going to lay out the methodologies that we used at Sprint.ly to craft the perfect 1.0 for our users. It all begins with a lesson in survivorship bias. In short, survivorship bias, as it applies to product development, posits that you’re going to get dramatically different responses to the question “What feature would you like?” when asking current customers versus former or potential customers.

LESSON 1: OBJECTIVELY EVALUATE YOUR EXIT SURVEYS

You do have an exit survey, yes? If not, stop reading this now, go to Wufoo, and set up a simple form asking customers who cancel their accounts or leave your product for input on why they left. You can take a look at ours for reference.

The problem with exit surveys and customer feedback in general is that everyone asks for things in slightly different ways. Customer A says “Android”, Customer B says “iOS”, and Customer C says “reactive design”. What they’re all really saying is “mobile”. Luckily, human brains are pretty good pattern recognition engines.

So here’s what I did:

- Created a spreadsheet and put groups along the top for each major theme I noticed in our exit surveys. I only put a theme up top if it was mentioned by more than one customer.

- I then went through every single exit survey and put a one (1) underneath each theme that the entry mentioned. An entry that mentioned several themes got a one under each of them.

- I then calculated basic percentages of each theme so that I could rank each theme by what percentage of our former customers had requested that the theme be addressed.
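The spreadsheet steps above can also be sketched in a few lines of Python. This is only an illustration, not the tooling used at Sprint.ly: the `coded_surveys` data and the `rank_themes` function are hypothetical, and it assumes each exit survey has already been read by a person and coded into a set of themes. The `min_mentions=2` default mirrors the rule of keeping only themes mentioned by more than one customer.

```python
from collections import Counter

# Hypothetical hand-coded exit surveys: one set of themes per survey entry.
coded_surveys = [
    {"pricing", "data density"},
    {"mobile"},
    {"data density", "mobile"},
    {"data density"},
    {"custom workflows"},
]


def rank_themes(surveys, min_mentions=2):
    """Return (theme, percent of surveys mentioning it), highest first."""
    # Each entry is a set, so a theme counts at most once per survey.
    counts = Counter(theme for entry in surveys for theme in entry)
    total = len(surveys)
    ranked = [
        (theme, 100.0 * mentions / total)
        for theme, mentions in counts.items()
        if mentions >= min_mentions  # drop themes raised by a single customer
    ]
    # Sort by percentage descending, then by name so ties are stable.
    return sorted(ranked, key=lambda pair: (-pair[1], pair[0]))


for theme, percent in rank_themes(coded_surveys):
    print(f"{theme}: {percent:.0f}%")
```

Because one survey can mention several themes, the percentages are not expected to add up to 100.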

Here are the results:

Now I know you don’t know our product as well as yours so the themes might not make much sense, but allow me to elaborate on the points that I found most interesting about this data:

- Our support queues are filled with people asking for customized workflows, but in reality it doesn’t appear to be a major force driving people away from Sprint.ly.

- 17% of our customers churn either because we have no estimates or they can’t track sprints. Guess what? Both of those are core existing features in Sprint.ly. Looks like we have an education and on-boarding problem there.

- The highest non-pricing reason people were leaving was a big bucket that we referred to internally as “data density” issues.

After doing this research I was confident that we should be doubling down on fixing these UI/UX issues and immediately started working on major updates to a few portions of the website that we believed would largely mitigate our dreaded “data density” issues.

But how could we know these changes would keep the next customer from leaving?

LESSON 2: IDENTIFY WHICH CUSTOMERS WERE LIKELY CHURNING DUE TO “DATA DENSITY” ISSUES

We store a timestamp for when a customer creates their account and a separate one for when they cancel it. This is useful data to have for a number of reasons, but what I found most telling was the following:

- Calculate the difference between when accounts are created and cancelled as an integer number of days.

- Group the cancelled accounts by the month in which they churned and count each group, e.g. 100 churned in the first month, 50 in the second, etc.
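As a rough sketch of those two steps, assuming the timestamps are available as Python `datetime` pairs and treating a "month" as a 30-day block (both are assumptions for illustration; the function name `churn_by_month` and the sample data are hypothetical):

```python
from collections import Counter
from datetime import datetime


def churn_by_month(accounts, days_per_month=30):
    """Count cancelled accounts by the month of their lifetime in which they left.

    `accounts` is an iterable of (created_at, cancelled_at) datetime pairs;
    cancelled_at is None for accounts that are still active.
    """
    buckets = Counter()
    for created_at, cancelled_at in accounts:
        if cancelled_at is None:
            continue  # still a customer, so not part of the churn counts
        lifetime_days = (cancelled_at - created_at).days  # integer days
        # Days 0-29 fall in month 1, days 30-59 in month 2, and so on.
        buckets[lifetime_days // days_per_month + 1] += 1
    return dict(sorted(buckets.items()))


# Hypothetical sample data.
accounts = [
    (datetime(2012, 1, 1), datetime(2012, 1, 15)),  # 14 days  -> month 1
    (datetime(2012, 1, 5), datetime(2012, 1, 15)),  # 10 days  -> month 1
    (datetime(2012, 1, 1), datetime(2012, 2, 20)),  # 50 days  -> month 2
    (datetime(2012, 3, 1), datetime(2012, 8, 1)),   # 153 days -> month 6
    (datetime(2012, 3, 1), None),                   # still active
]

print(churn_by_month(accounts))
```

The resulting counts per month are what would be plotted as a bar chart.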

You should end up with a chart that looks something like this:

It shouldn’t be surprising that the vast majority of people churn in the first two months. These are your trial users for the most part. The reason our first month is so high is another post for another day. What we really want to figure out is why an engaged paying customer is leaving, so let’s remove trial users and the first month to increase the signal.

We get a very different picture:

In general you want this chart to curve down over time, but you can see Sprint.ly had a few troubling anomalies to deal with. Namely, there are clear bumps in churn numbers for months 5, 7, and 8.

We had a theory for why this was based on the above survey data. A large part of the “data density” issues had to do with a number of problems managing backlogs with a lot of items in them. Was the large amount of churn in months 5-8 due to people hitting the “data density” wall?

LESSON 3: TESTING THE THESIS

So far we’ve objectively identified the top reasons that people were leaving Sprint.ly, as well as a few anomalies that might point to customers who are churning for those reasons. Now we needed to verify our thesis and, more importantly, show those customers what we were cooking up and see whether our update would have been more (or less) likely to prevent them from churning.

To do that we turned to intercom.io and set up an email to be sent out to customers that fit the following criteria:

- Had created their account more than 4 months ago.

- Had not been seen on the site in the last 2 weeks.

- Was the person who owned the account.
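For readers working from their own database rather than a messaging tool, the three criteria above amount to a simple filter. The sketch below is not Intercom's API: the `User` fields and the `should_email` function are hypothetical names, and "4 months" is approximated as 120 days.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class User:
    # Hypothetical fields; a real schema will differ.
    email: str
    account_created_at: datetime
    last_seen_at: datetime
    is_account_owner: bool


def should_email(user, now):
    """True if the user matches all three outreach criteria."""
    created_long_ago = user.account_created_at < now - timedelta(days=120)  # ~4 months
    gone_quiet = user.last_seen_at < now - timedelta(days=14)  # not seen in 2 weeks
    return created_long_ago and gone_quiet and user.is_account_owner


now = datetime(2012, 10, 1)
users = [
    User("owner@example.com", datetime(2012, 3, 1), datetime(2012, 9, 1), True),
    User("active@example.com", datetime(2012, 3, 1), datetime(2012, 9, 30), True),
    User("member@example.com", datetime(2012, 3, 1), datetime(2012, 9, 1), False),
    User("new@example.com", datetime(2012, 8, 1), datetime(2012, 9, 1), True),
]

recipients = [u.email for u in users if should_email(u, now)]
print(recipients)
```

Passing `now` in explicitly, rather than calling `datetime.now()` inside the function, keeps the filter easy to test against a fixed date.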

I also manually sent this email to a number of customers who fit this profile, whom I was able to glean from our internal database. I got a number of responses from customers and was able to schedule phone calls with a handful of them.

From there it was a matter of showing our cards. I would hop on Skype, walk through the new design ideas and the problems we were trying to address, and ask whether or not these features would have kept them from leaving in the first place. Luckily, we had been closely measuring feedback and were pleased to find out that our efforts were not lost and that the changes did indeed address a lot of their issues.

CONCLUSION

Making product decisions based on customer feedback can be difficult. The more you can do to increase signal over noise, gather objective metrics, and distill customer feedback the better. It’s not always easy, but it’s always worth it.

About The Author, Joe Stump

Joe is a seasoned technical leader and serial entrepreneur who has co-founded three venture-backed startups (SimpleGeo, attachments.me, and Sprint.ly), was Lead Architect of Digg, and has invested in and advised dozens of companies.